3D SLAM with ZED Camera

1. Objective

This was my first project using SLAM. The objective was to build a 3D map of the lab space with the ZED Stereo Camera, a LiDAR, and JACKAL. My partner and I used RTAB-MAP to integrate the odometry, depth, and RGB data from the different sensors, and displayed the resulting map in RVIZ.

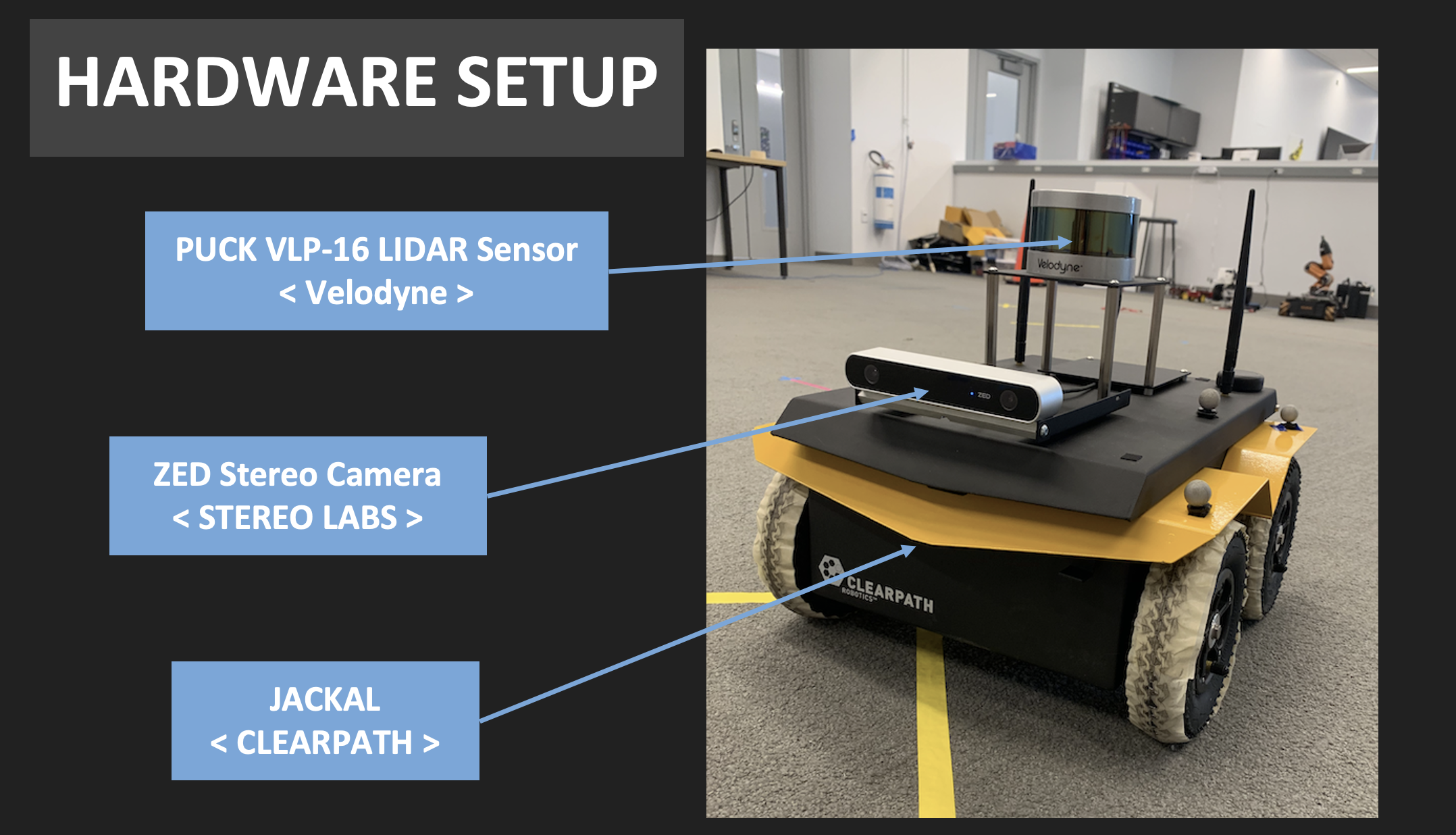

2. Hardware Setup

This project used JACKAL from Clearpath. To support multiple SLAM experiments, a ZED Stereo Camera and a Velodyne LiDAR were mounted on top of JACKAL. For this particular project, only the ZED camera was used to obtain information.

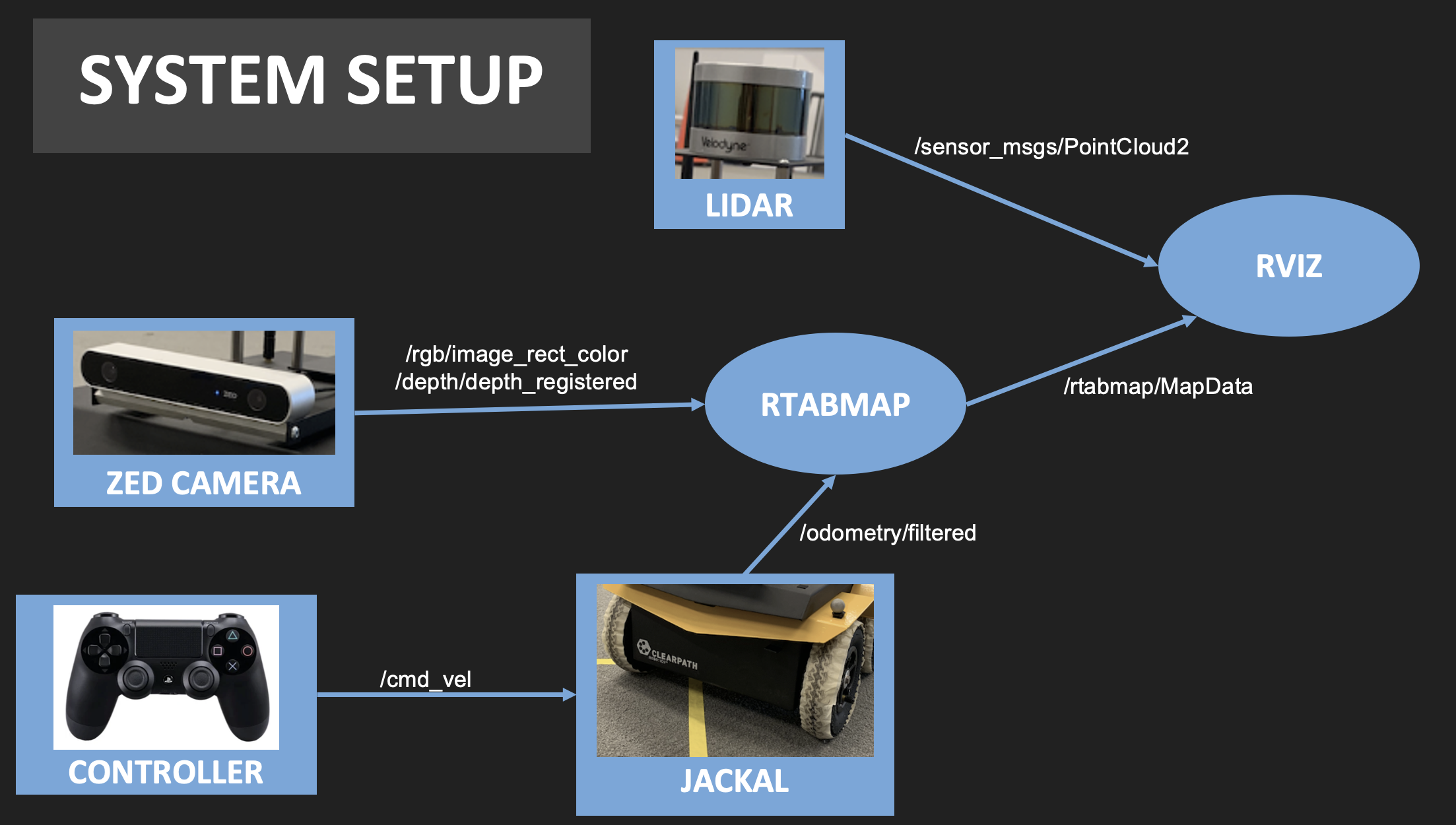

3. System Setup

This diagram explains the overall system setup and the data used to construct the map in ROS. The ZED Stereo Camera publishes to the topics '/rgb/image_rect_color' and '/depth/depth_registered', both with the message type 'sensor_msgs/Image'. JACKAL was driven with a PlayStation controller, which publishes to the topic '/cmd_vel'. JACKAL also publishes its odometry information to '/odometry/filtered'. RTAB-MAP subscribes to '/odometry/filtered', '/rgb/image_rect_color', and '/depth/depth_registered', and integrates the three into '/rtabmap/MapData'. RVIZ subscribes to '/rtabmap/MapData' and visualizes it on the screen. The LiDAR information is not used to build the map in this project. However, because the LiDAR publishes a high-quality point cloud, we used it as a reference to compare against the map generated by RTAB-MAP.

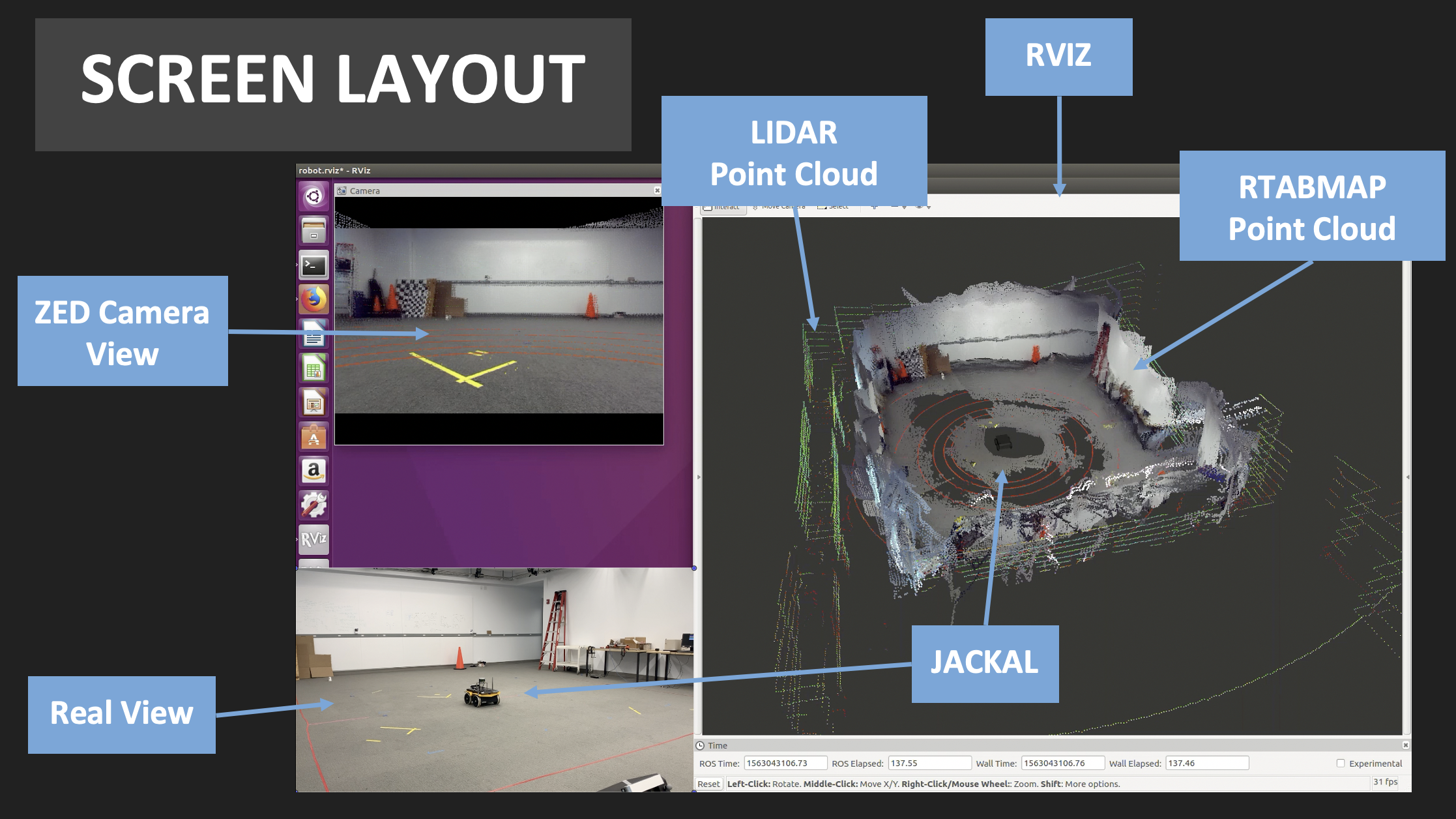
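The topic graph described above can be sketched as a small Python data structure. This is a minimal model for illustration, not ROS code. The node names (`ps_controller`, `jackal`, `zed_camera`, `rtabmap`, `rviz`) are hypothetical labels, while the topic names are the ones listed in the text. The sketch also assumes that JACKAL's base subscribes to '/cmd_vel', since the controller drives it. The breadth-first walk collects every node whose output eventually reaches RVIZ.

```python
from collections import deque

# node -> (topics it publishes, topics it subscribes to).
# Node names are hypothetical labels; topic names come from the text.
GRAPH = {
    "ps_controller": ({"/cmd_vel"}, set()),
    "jackal": ({"/odometry/filtered"}, {"/cmd_vel"}),  # assumed: base consumes /cmd_vel
    "zed_camera": ({"/rgb/image_rect_color", "/depth/depth_registered"}, set()),
    "rtabmap": (
        {"/rtabmap/MapData"},
        {"/odometry/filtered", "/rgb/image_rect_color", "/depth/depth_registered"},
    ),
    "rviz": (set(), {"/rtabmap/MapData"}),
}


def publishers_of(topic):
    """Return the set of nodes that publish on `topic`."""
    return {node for node, (pubs, _subs) in GRAPH.items() if topic in pubs}


def upstream_nodes(node):
    """Breadth-first walk back through subscriptions, collecting every node
    whose output eventually reaches `node`."""
    seen, queue = set(), deque([node])
    while queue:
        current = queue.popleft()
        for topic in GRAPH[current][1]:
            for pub in publishers_of(topic):
                if pub not in seen:
                    seen.add(pub)
                    queue.append(pub)
    return seen


print(sorted(upstream_nodes("rviz")))
# ['jackal', 'ps_controller', 'rtabmap', 'zed_camera']
```

Walking back from RVIZ reaches the controller only through JACKAL's odometry. This matches the diagram: the controller moves the robot but does not feed the map directly.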

4. Screen Layout

This diagram shows the screen layout during our experiments. The ZED Camera view shows JACKAL's point of view through the camera. The Real view shows our own perspective, which we used to navigate JACKAL around the room. RVIZ displays the LiDAR point cloud alongside the map JACKAL built with the camera, so the two can be compared.

5. Feature Detection Algorithm

Many feature detection algorithms are available, and they vary in quality and speed. We tried FAST, SURF, and ORB, but SIFT (Scale-Invariant Feature Transform) worked best in this project, so we decided to use it. A detailed explanation of the algorithm can be found here: Algorithm Explanation.

The choice of feature detection algorithm was important in this project. The images captured by the ZED camera are linked to each other based on the detected features, and are then combined into a map. In addition, after the robot completes a full loop (360°), loop closure does not occur unless enough features are found in the images.
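The role of feature matching can be illustrated with a toy sketch. This is not RTAB-MAP's implementation. It shows generic nearest-neighbour descriptor matching with Lowe's ratio test, which is commonly paired with SIFT. A hypothetical `min_matches` threshold stands in for the "enough features" condition that decides whether two images are linked or a loop closure is accepted. The descriptors are made-up 3-D vectors (real SIFT descriptors have 128 dimensions), and `ratio=0.75` and `min_matches=3` are illustrative values.

```python
import math


def ratio_test_matches(desc_a, desc_b, ratio=0.75):
    """Match each descriptor in desc_a to its nearest neighbour in desc_b,
    keeping the match only if it is clearly closer than the second-nearest."""
    matches = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(desc_a):
        # Candidates in desc_b sorted by Euclidean distance to d.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches


def accept_loop_closure(matches, min_matches=3):
    """Hypothetical rule: link two images only when enough features agree."""
    return len(matches) >= min_matches


# Made-up descriptors from two views of the same place.
desc_prev = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (5, 5, 5)]
desc_curr = [(0.1, 0, 0), (10, 0.2, 0), (0, 10, 0.1), (20, 20, 20)]

matches = ratio_test_matches(desc_prev, desc_curr)
print(matches)                       # [(0, 0), (1, 1), (2, 2)]
print(accept_loop_closure(matches))  # True
```

The descriptor `(5, 5, 5)` is almost equally close to three candidates, so the ratio test rejects it as ambiguous. A detector that yields few distinctive features in a scene leaves too few matches to pass the threshold, which is why feature quality matters for loop closure.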

6. Parameter Tuning

We tuned many parameters in the ZED Stereo Camera and RTAB-MAP launch files to improve the quality of the mapping. Through extensive trial and error, we worked to produce the best map possible.
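The section does not record which parameters were changed, so the following is only a hypothetical way to organise that trial and error as a grid search. `Kp/DetectorStrategy`, `Kp/MaxFeatures`, and `Vis/MinInliers` are existing RTAB-Map parameters chosen as examples. In RTAB-Map's detector list, strategy 1 is SIFT. The candidate values are placeholders, and the helper names `configurations` and `to_launch_params` are made up. Each configuration is rendered as string-typed `<param>` lines, the form used in the rtabmap_ros tutorial launch files.

```python
from itertools import product

# Example RTAB-Map parameters with placeholder candidate values.
CANDIDATES = {
    "Kp/DetectorStrategy": ["1"],       # 1 selects SIFT in RTAB-Map's detector list
    "Kp/MaxFeatures": ["400", "800"],   # placeholder values
    "Vis/MinInliers": ["15", "25"],     # placeholder values
}


def configurations(candidates):
    """Yield one dict per combination of candidate values (a grid search)."""
    names = sorted(candidates)
    for values in product(*(candidates[name] for name in names)):
        yield dict(zip(names, values))


def to_launch_params(cfg):
    """Render one configuration as string-typed <param> lines for a launch file."""
    return [f'<param name="{k}" type="string" value="{v}"/>' for k, v in cfg.items()]


for i, cfg in enumerate(configurations(CANDIDATES)):
    print(f"run {i}:")
    for line in to_launch_params(cfg):
        print("  " + line)
```

Enumerating the runs up front makes it easier to keep track of which combination produced which map when judging the results against the LiDAR point cloud in RVIZ.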

7. Supplementary Materials

The presentation slide can be found above.

8. References

We would especially like to thank Mathieu Labbe from RTAB-MAP for answering all our questions.

- http://wiki.ros.org/rtabmap_ros/Tutorials/HandHeldMapping
- https://github.com/introlab/rtabmap/wiki/Tutorials
- http://wiki.ros.org/rtabmap_ros/Tutorials/SetupOnYourRobot