3D SLAM with Lidar

1. Objective

After completing the SLAM with ZED Camera project, we moved on to SLAM with LiDAR. The objective of this project was to build a 3D map of the fifth floor of Upson Hall at Cornell University using a lidar, a ZED stereo camera, and a Jackal robot. The process is similar to that of the previous project: we used RTAB-Map to integrate the odometry, depth, and RGB data from the different sensors, and visualized the result in RViz.

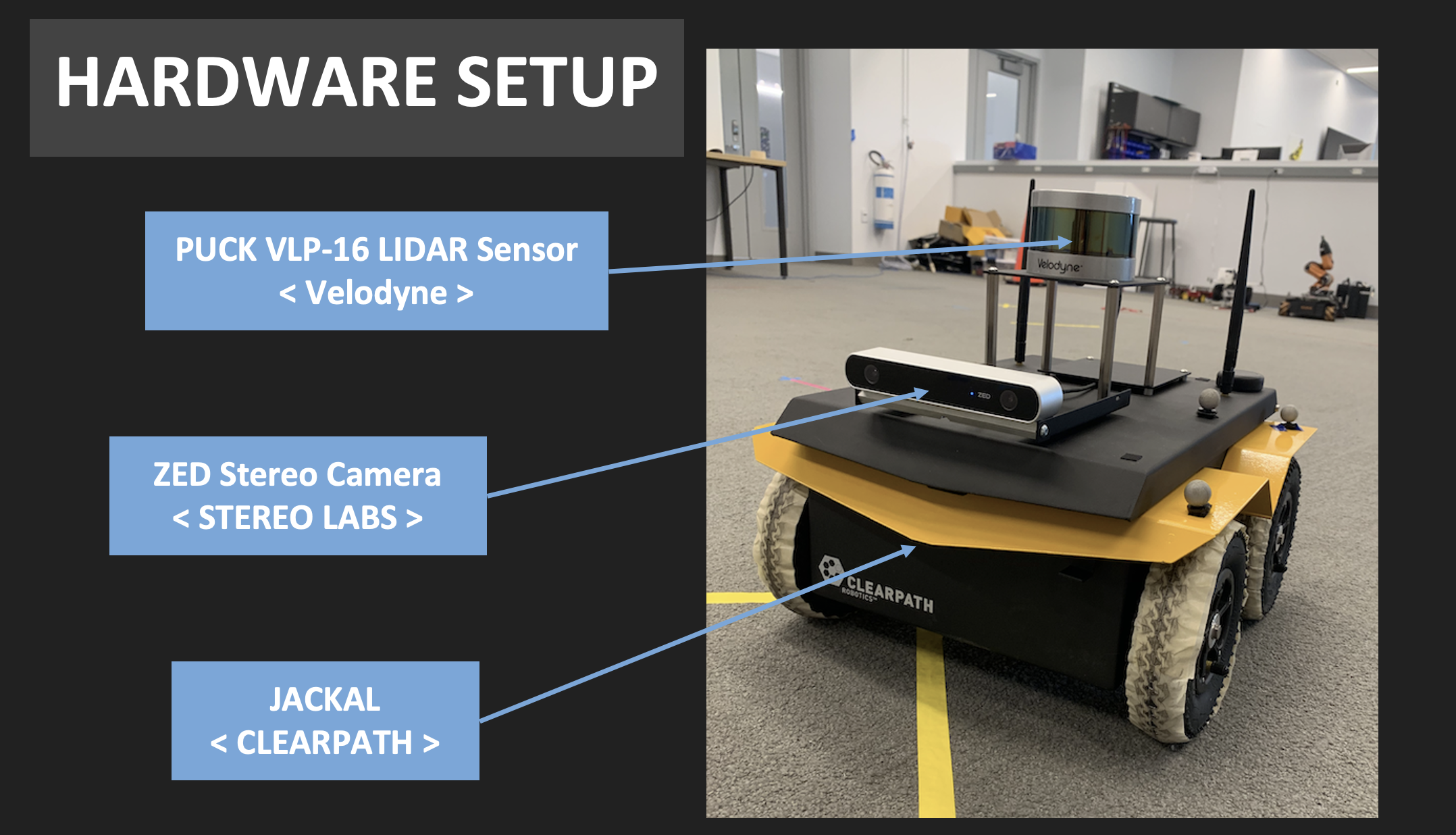
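Integrating odometry, depth, and RGB data only makes sense if the messages being combined were captured at nearly the same time, so they first have to be paired by timestamp. The following minimal sketch illustrates that pairing with a simplified nearest-timestamp matcher. It is not the `message_filters` ApproximateTime policy that ROS actually uses (RTAB-Map chooses between exact and approximate synchronization through its `approx_sync` parameter), and the stream names and the 20 ms tolerance are hypothetical.

```python
from bisect import bisect_left


def nearest(stamps, t):
    """Return the value in the sorted list `stamps` that is closest to time t."""
    i = bisect_left(stamps, t)
    # The closest stamp is either just before or just after the insertion point.
    candidates = stamps[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda s: abs(s - t))


def match_by_time(reference, others, tolerance=0.02):
    """Pair each reference timestamp with the closest stamp from every other stream.

    reference: sorted timestamps (s) of the driving stream, e.g. lidar scans.
    others: dict mapping a (hypothetical) stream name to sorted timestamps.
    A reference stamp is dropped if any stream has nothing within `tolerance`.
    """
    matched = []
    for t in reference:
        group = {"reference": t}
        for name, stamps in others.items():
            if not stamps:
                break
            s = nearest(stamps, t)
            if abs(s - t) > tolerance:
                break
            group[name] = s
        else:
            # Only reached when every stream produced a close-enough match.
            matched.append(group)
    return matched


if __name__ == "__main__":
    lidar = [0.00, 0.10, 0.20]  # made-up scan timestamps (s)
    streams = {"odom": [0.005, 0.095, 0.31], "rgb": [0.01, 0.11, 0.21]}
    for group in match_by_time(lidar, streams):
        print(group)
```

With these made-up timestamps, the scan at 0.20 s is dropped because no odometry message arrived within 20 ms of it.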

2. Hardware Setup

The hardware setup is the same as in the previous project, since we used the same Jackal.

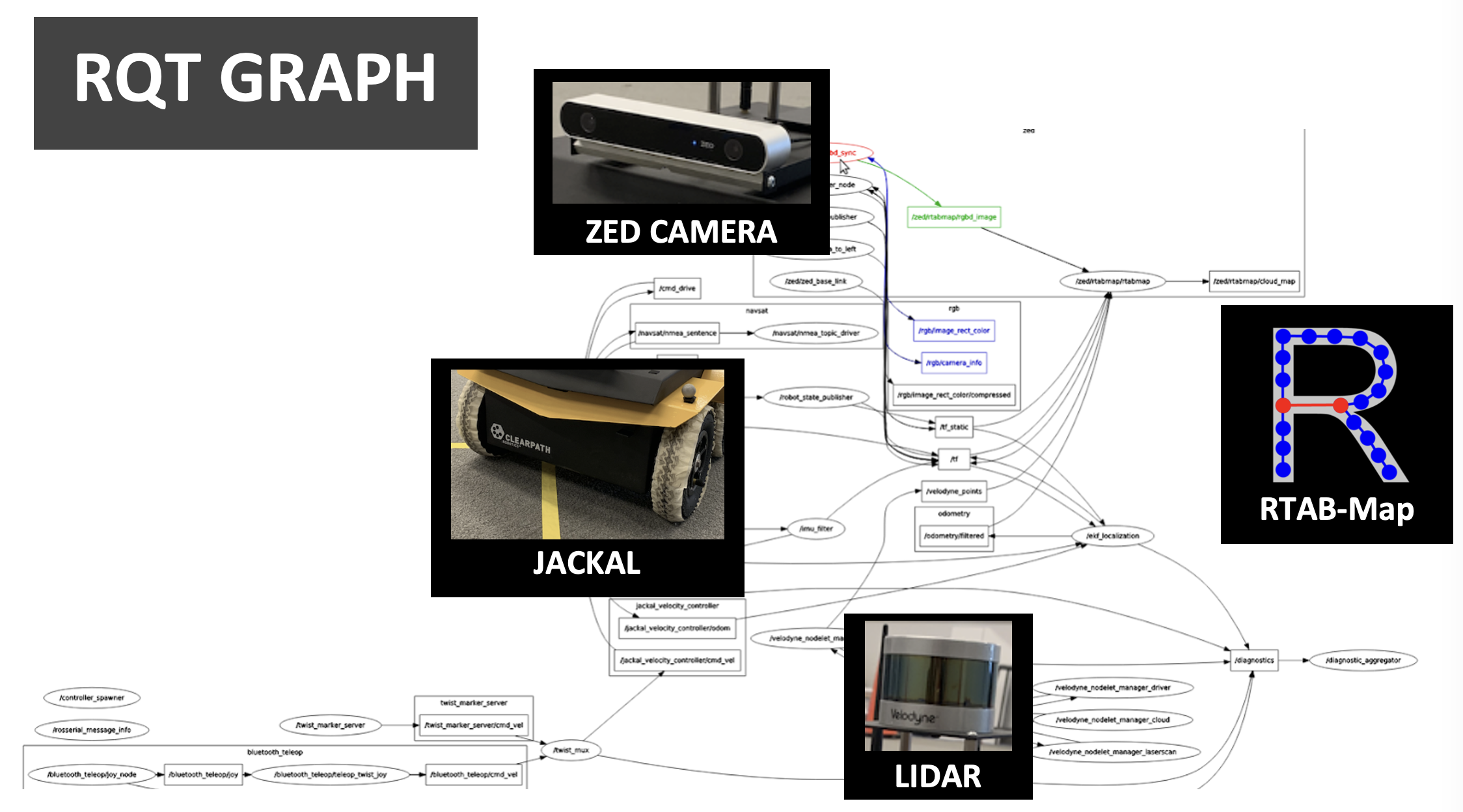

3. RQT Graph

This graph shows how the data from each component are combined into a 3D map.

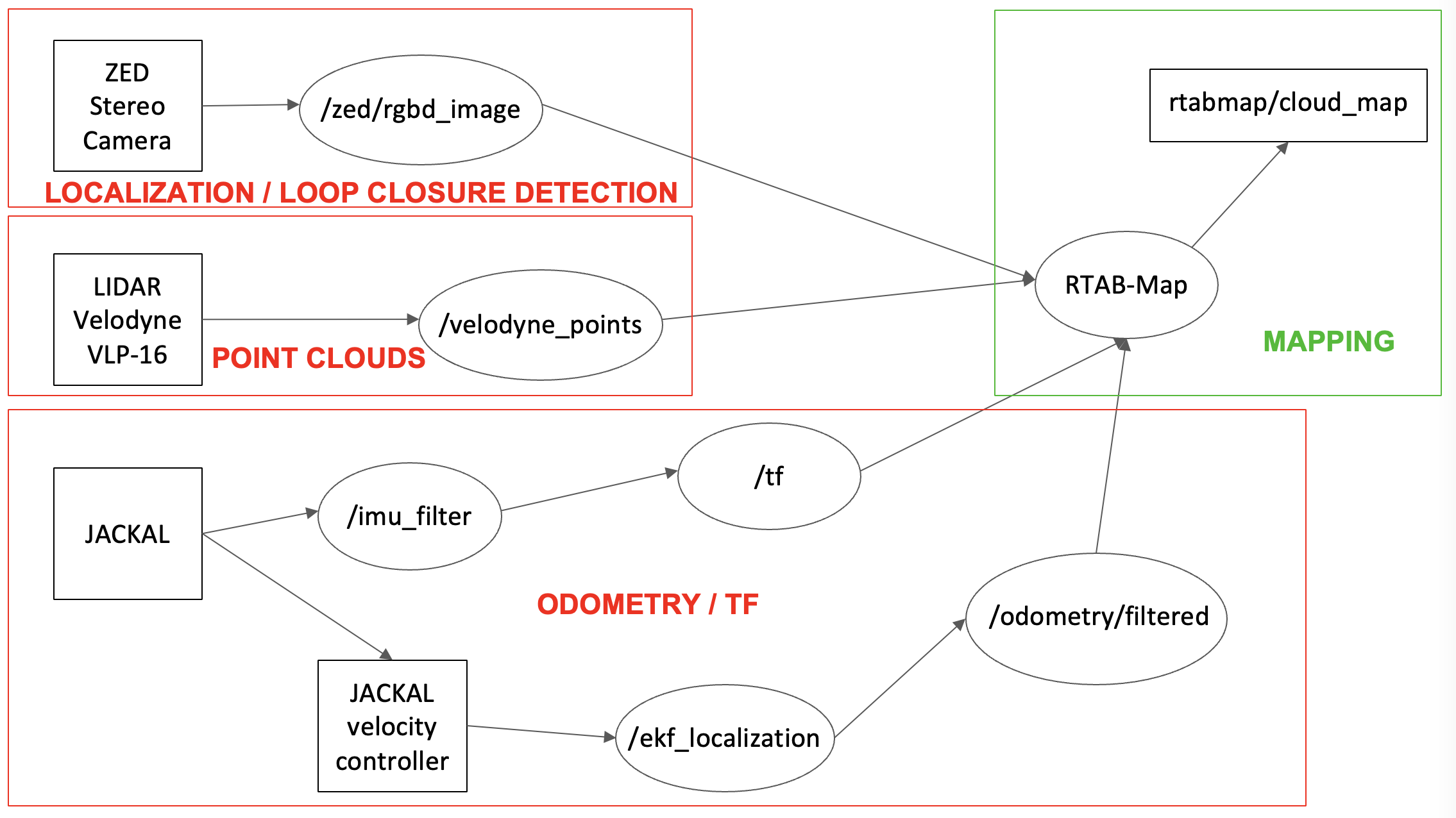
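Because the graph is only available as an image, the wiring it depicts can also be written down as data. The sketch below encodes a simplified version of such a graph as an adjacency list and walks it backwards to find which sensor drivers ultimately feed the RTAB-Map node. All node names here are hypothetical placeholders, not the names in the project's actual RQT graph.

```python
from collections import deque

# Hypothetical, simplified wiring: each key publishes to the nodes in its list.
# These names are placeholders, not the nodes from the project's RQT graph.
GRAPH = {
    "lidar_driver": ["rtabmap"],
    "jackal_odometry": ["rtabmap"],
    "zed_camera": ["rgbd_sync"],
    "rgbd_sync": ["rtabmap"],
    "rtabmap": ["rviz"],
}


def upstream_sources(graph, target):
    """Return the nodes with no inputs of their own that eventually feed `target`."""
    # Invert the edges so we can walk from the target back toward the sensors.
    parents = {}
    for src, dsts in graph.items():
        for dst in dsts:
            parents.setdefault(dst, []).append(src)

    sources, seen, queue = set(), {target}, deque([target])
    while queue:
        node = queue.popleft()
        ups = parents.get(node, [])
        if not ups and node != target:
            sources.add(node)  # nothing publishes to it, so it is a data source
        for up in ups:
            if up not in seen:
                seen.add(up)
                queue.append(up)
    return sources


if __name__ == "__main__":
    print(sorted(upstream_sources(GRAPH, "rtabmap")))
```

Deleting the `zed_camera` and `rgbd_sync` entries leaves only the lidar driver and the Jackal odometry as sources for `rtabmap`, which reflects that the map is built mainly from those two inputs.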

4. Simplified RQT Graph

The system can be divided into three main components: the lidar, the Jackal, and the ZED camera. The ZED camera was added for loop closure detection based on its RGB-D images, but it did not noticeably improve the results. The mapping is essentially done by combining the pose and odometry published by the Jackal with the point clouds published by the lidar. RTAB-Map integrates the data from these two sources (three, including the ZED) into a 3D map, as shown in the demonstration video.
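The core idea behind mapping with the Jackal's pose and the lidar's point clouds is that each scan, recorded in the robot's own frame, is moved into a fixed map frame using the pose at which it was captured, and the transformed scans are accumulated. The sketch below shows that step for a planar pose (x, y, yaw). It is a simplified illustration, not RTAB-Map's implementation, which works with full 3D transforms and additionally refines poses through scan registration and loop closure. The function names and the assumption that the lidar sits at the robot origin are hypothetical.

```python
import math


def to_map_frame(points, pose):
    """Transform (x, y, z) points from the robot frame into the map frame.

    pose is a planar odometry estimate (x, y, yaw) with yaw in radians.
    Hypothetically assumes the lidar is mounted at the robot origin.
    """
    px, py, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate about the vertical axis, then translate; z is unchanged for a planar pose.
    return [(px + c * x - s * y, py + s * x + c * y, z) for x, y, z in points]


def accumulate(scans):
    """Merge a sequence of (pose, points) pairs into one map-frame point list."""
    cloud = []
    for pose, points in scans:
        cloud.extend(to_map_frame(points, pose))
    return cloud


if __name__ == "__main__":
    scans = [
        ((0.0, 0.0, 0.0), [(1.0, 0.0, 0.5)]),          # robot at origin, facing +x
        ((2.0, 0.0, math.pi / 2), [(1.0, 0.0, 0.5)]),  # drove 2 m, turned left 90 deg
    ]
    for point in accumulate(scans):
        print(point)
```

A point 1 m ahead of the robot lands at (1, 0) for the first pose and at approximately (2, 1) after the robot has driven 2 m and turned 90 degrees to the left, which is why any drift in the odometry shows up directly as distortion in the map.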

5. Parameter Tuning

Many parameters in the ZED stereo camera and RTAB-Map launch files were tuned to improve the quality of the mapping. We went through extensive trial and error to produce the best map possible.

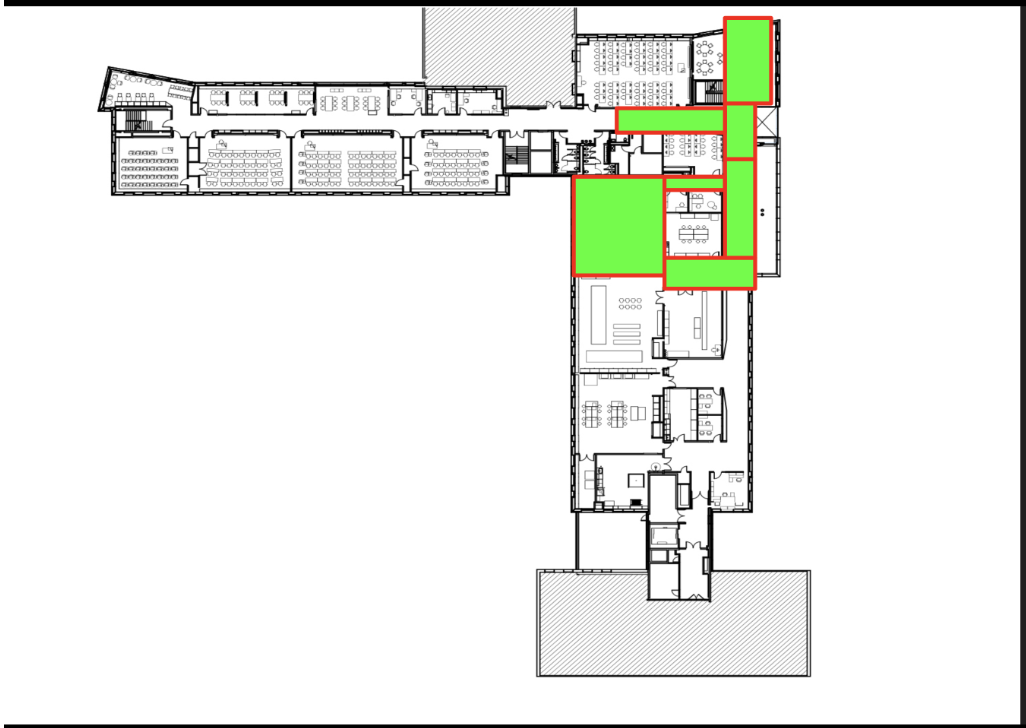
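In ROS 1 launch files, RTAB-Map's own parameters (names of the form `Group/Name`, such as `Reg/Strategy`) are typically passed to the `rtabmap` node as `<param>` entries with `type="string"`, as in the RTAB-Map ROS tutorials. The sketch below generates such entries from a Python dictionary, which can make trial-and-error tuning easier to track. The parameter names are real RTAB-Map / `rtabmap_ros` options, but the values are hypothetical starting points, not the values tuned in this project, and the helper function is purely illustrative.

```python
from xml.sax.saxutils import quoteattr

# Hypothetical starting values for illustration only; not this project's tuned settings.
PARAMS = {
    "frame_id": "base_link",
    "subscribe_scan_cloud": True,
    "approx_sync": True,
    "Reg/Strategy": "1",                 # 0 = visual, 1 = ICP, 2 = visual + ICP
    "Icp/VoxelSize": "0.1",              # voxel size (m) used to downsample clouds for ICP
    "RGBD/NeighborLinkRefining": "true",
}


def to_launch_params(params):
    """Render a dict as ROS 1 launch-file <param> lines.

    Python bools become type="bool"; everything else is written as a string,
    which is how RTAB-Map's "Group/Name" parameters are usually passed.
    """
    lines = []
    for name, value in params.items():
        if isinstance(value, bool):
            kind, text = "bool", str(value).lower()
        else:
            kind, text = "string", str(value)
        # quoteattr escapes the value and wraps it in double quotes.
        lines.append(
            f"<param name={quoteattr(name)} type={quoteattr(kind)} value={quoteattr(text)}/>"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_launch_params(PARAMS))
```

Keeping the values in one dictionary makes it easy to diff two tuning attempts before pasting the generated lines inside the `rtabmap` node of a launch file.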

6. Mapped Area

7. Supplementary Materials

The presentation slides can be found above.

8. References

- http://wiki.ros.org/rtabmap_ros/Tutorials/HandHeldMapping
- https://github.com/introlab/rtabmap/wiki/Tutorials
- http://wiki.ros.org/rtabmap_ros/Tutorials/SetupOnYourRobot